Journées d’Informatique Musicale (JIM) 2015

Faculty of Music, University of Montreal

Technology – the expressive extension of my artistic sensibility

Keynote

Hans Tutschku

Harvard University, Cambridge, MA, USA

It is a great pleasure and honor to speak today to the JIM participants. My subject is ‘Technology as an expressive extension of my artistic sensibility’. I do not see technology only as a means, but as central to my thinking and to the design of my pieces.

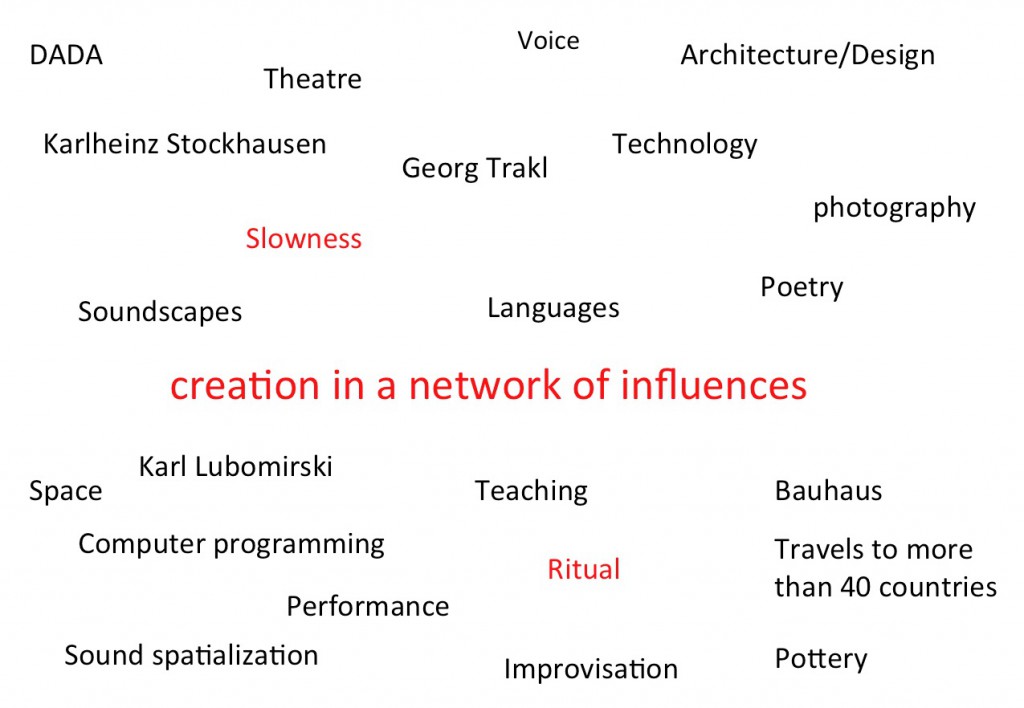

Curiosity is something that interests me in all kinds of activities, in education, and in interaction with other people. I want to create situations where someone can develop an inquiring mind and be open to the world. I myself had the chance to travel a lot and to get introduced to many different forms of creativity. I studied drama, music, painting, photography, ceramics and all this is somehow connected with technology and with computer programming.

I will divide this presentation into four parts. The first is about education and creation. Then, I’ll talk about the question of gesture and about a few ideas on multimedia and installations. I’ll finish on iOS devices and some opportunities that I have explored in this area.

education – creation

My journey began with a dual education in music and theater, and I see my creations as activities in a network of influences.

As my parents are musicians, I had the chance to learn the piano very young and I joined a theatre group at the age of 13. But there were many other factors that had a strong influence on my education.

Among them: being born in Weimar, a city rich in culture, with the Bauhaus and with classical music, Bach, Liszt and others; and having begun to improvise very young. When I was 15, I joined a group that I will discuss in more detail later.

Here are some questions:

- Who am I as an artist?

- Is what I do genuine?

- How do I digest and rework artistic influences that I admire?

- And in doing so, how do I avoid becoming a clone and find my own voice?

Another issue that is quite central to my thinking:

- How much of an expert must I be in a certain field before I present my results to an audience?

Here are two words that are very present: integration and confusion. How can technology be integrated into my work and at the same time how can it generate a situation of confusion and surprise?

I will demonstrate how the use of computers – and technology generally – has changed my compositional thinking.

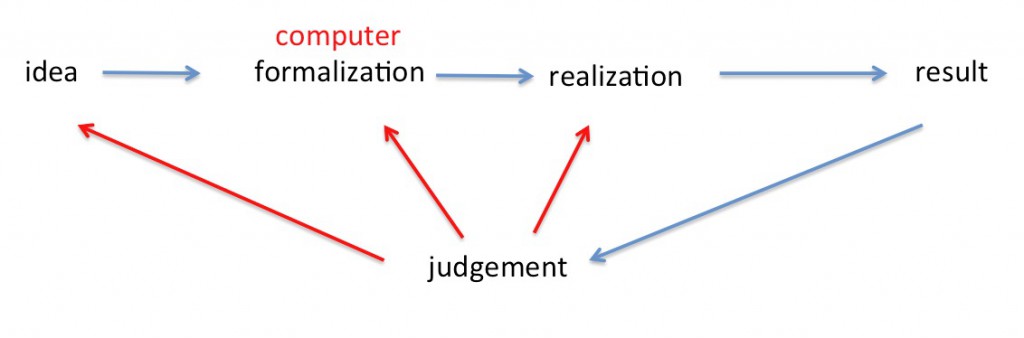

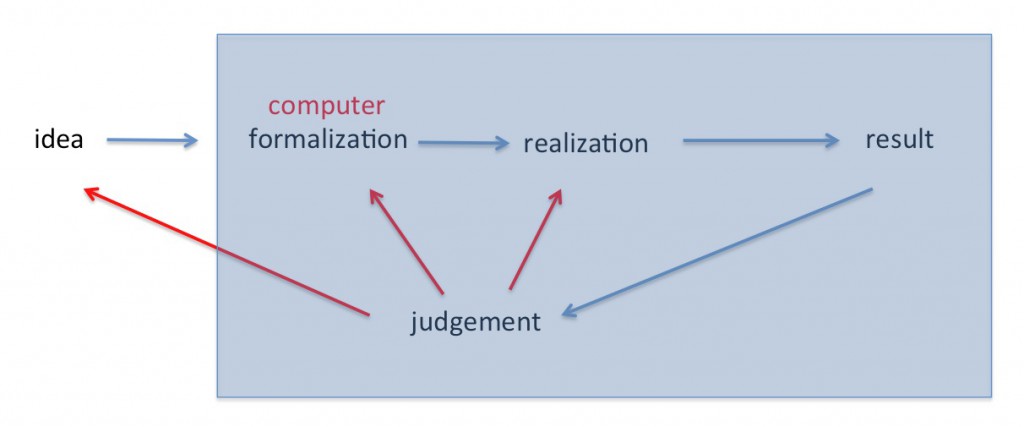

If we imagine the creative process schematically, we could say that we develop an idea, we realize it and obtain a result.

For example, I take my pen, write some notes on paper and observe the result. My judgment will point both to the realization and to the idea. I enter a recursive loop, which gradually changes the idea and the way I realize it in order to improve the result.

When technology enters the game, it gets a little more complicated because there is one more step necessary: formalization.

How can I make the idea understandable for the computer or the technology?

The result will again provoke a judgment that now points to the idea, the formalization and the realization. If the result is not satisfying, it is now less clear where the problem lies: in the idea, its formalization or its realization.

Another problem when using technology is a tendency to stop questioning the idea. As soon as we get a result, we spend a lot of time improving the formalization and the implementation.

There is a risk that the judgment, driven by an obsession with the technology, forgets that the idea may also need questioning. I therefore try to keep a certain distance from the magic of technology.

Ensemble für Intuitive Musik Weimar

I joined the ensemble at the age of 15, and it became a place of incredible training. From the start, we worked on pieces by Stockhausen, Cage and other composers who were not represented in Weimar during the communist regime. Accompanying Stockhausen after 1989 on his concert tours, and working with many improvising musicians trained me and let me focus on an interesting question: what is an instrument?

Playing with a pianist, a cellist and a trumpeter in the ensemble, I realized that for them the question did not arise. Although piano preparations can expand the range of sounds produced, the instrument remains pretty much defined.

Jokingly, I sometimes say that nobody would learn the clarinet if they had to fear that in six months an update would move all the keys to different positions. A certain stability in the relationship with an instrument is important in order to develop reflexes.

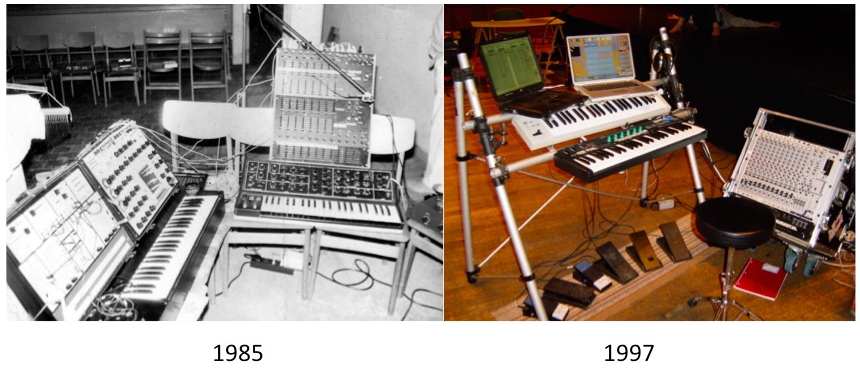

I worked for years to gradually expand the possibilities of my instrument and to refine it. But since 1997 its functionality has not changed. There are two keyboards, two computers, and a few pedals to control parameters.

These reflections are part of a technological change that I experienced during the past 30 years.

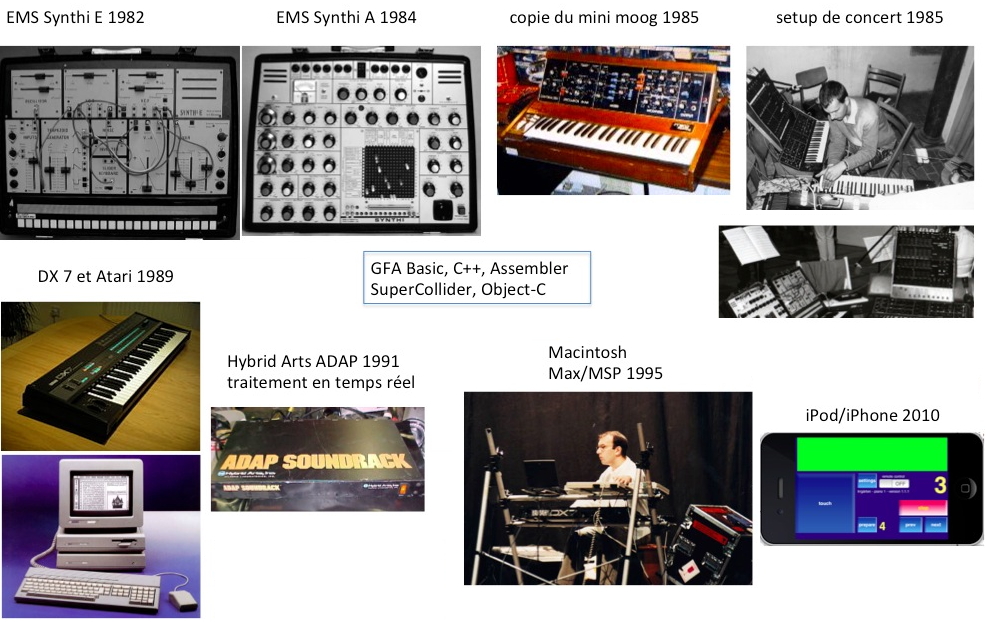

In 1982 I had my first analog synthesizer, the Synthi E by EMS. Later came the Synthi A, its big brother. Eventually, someone got hold of the construction plans of a Minimoog and copied it, because none of these devices were on sale in East Germany.

For my first electroacoustic composition, I cut the tape inside cassettes, because I did not have a reel-to-reel tape machine.

In 1989 I got my first computer and a DX7 as a gift from Markus Stockhausen, the trumpeter and son of Karlheinz Stockhausen. Markus had come to Weimar almost every summer since 1982 to play with us.

Subsequently, I worked with different programming languages. In 1990/91 I studied ‘Sonology’ in The Hague in the Netherlands and took a course on real-time sound treatment. I also attended a demonstration by IRCAM of their workstation, built on the NeXT cube with three DSP cards, which cost a fortune. I was thus eager to find an affordable solution for real-time sound manipulation.

Hybrid Arts had produced expansion hardware for the Atari computer, with audio converters and Texas Instruments processors, to turn the computer into a 16-bit sampler. But this product sold poorly: onstage, musicians prefer a keyboard with everything integrated to carrying three boxes that have to be interconnected, plus a computer screen. The company discontinued the product and sold it off cheaply, together with a programming manual.

I therefore learned C for the interface, Atari assembler for the communication with the converters, and TMS machine language to program the DSP processor from Texas Instruments.

At that time signals were not calculated in blocks (signal buffers), but one sample at a time. I had about 20 microseconds between two samples and could work out how many arithmetic operations or memory access cycles would fit into that interval.
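The per-sample time budget follows directly from the sample rate. The sketch below is only an illustration of that arithmetic: the sample rates and the 100 ns instruction cycle are assumptions chosen for the example, not figures from the talk, and the function names are hypothetical.

```python
def sample_period_us(sample_rate_hz: float) -> float:
    """Time between two consecutive samples, in microseconds."""
    return 1_000_000.0 / sample_rate_hz


def cycles_per_sample(sample_rate_hz: float, cycle_time_ns: float) -> int:
    """Whole instruction cycles that fit between two samples.

    cycle_time_ns is an assumed value; the talk does not state the
    clock speed of the DSP that was used.
    """
    period_ns = sample_period_us(sample_rate_hz) * 1000.0
    return int(period_ns // cycle_time_ns)


if __name__ == "__main__":
    for rate in (44_100, 48_000):  # assumed, common audio sample rates
        print(rate, "Hz:",
              round(sample_period_us(rate), 2), "us per sample,",
              cycles_per_sample(rate, 100.0), "cycles at an assumed 100 ns")
```

With sample-by-sample processing, everything the program does for one sample (reading memory, multiplying, writing the output) has to fit inside this budget.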

For one year I went deeper into the heart of the machines than I could ever have imagined. By 1991 I had finished a program that allowed simple granular synthesis, transpositions, delay lines, etc.

As I did not want to travel with the Atari CRT monitor, I wrote the program so that the monitor could stay at home. At computer startup, the program loaded and specific keys were mapped to treatments. For some visual feedback, I connected two LEDs: a red one lit during sound acquisition and a green one during playback. The lesson learned: without watching a screen, I listened differently. My communication with the other musicians was not absorbed by visual feedback.

From 1995 on, MaxMSP became my main platform, and I have since been very careful about how I interact with the software during a concert, limiting the need for visual interaction.

In the last few years I have also experimented with iOS devices, and I will talk about them at the end of this presentation.

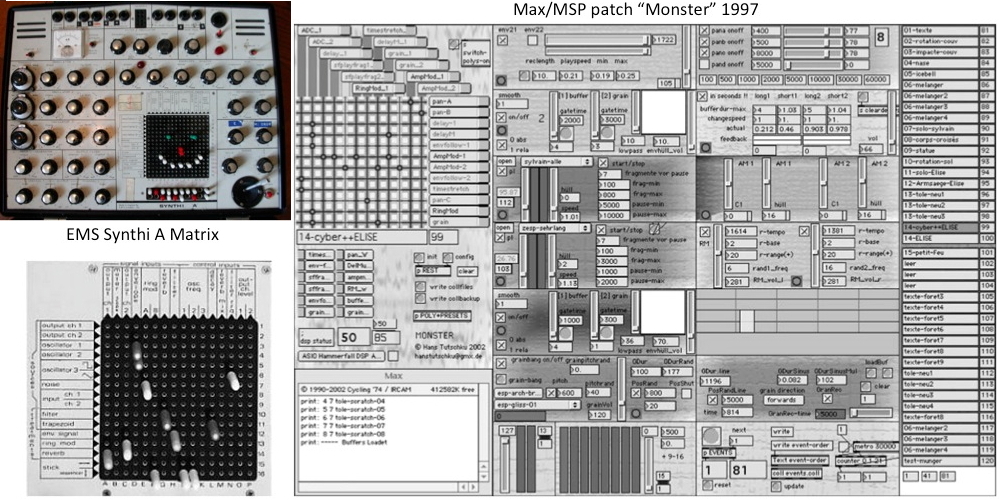

Learning the analog synthesizer served me well. The Synthi A was a modular synth with a connection matrix. The modules were not connected by default, and the audio and control signal flow could be determined by freely interconnecting outputs and inputs of modules.

One could simultaneously use the microphone signal to control parameters. I explored this possibility a lot in order to link the evolution of parameters to the intensity of the incoming sound.

My first improvisation patch with MaxMSP, from 1997, was called ‘monster’, and I am still using it. It has been updated for MaxMSP 3, 4, 5, 6 and 7, but the idea has not changed. The patch is also organized in modules and contains a matrix. It also has signal analysis modules that use the microphone for parameter control.

Gesture

The physical gesture of instrumentalists and dancers has been of great interest to me over the past 30 years. My music education on the piano, and later with live electronics, taught me to perform music long before I started composing. Therefore, gesture is central to my musical thinking: the act of making is inseparable from the sound quality.

All the music I wrote, whether for instruments, singers, or electronic sources, is in search of the expression of gestural phrasing, the relationship between cause and effect (and its negation) and a plausibility, informed by our experiences outside of music.

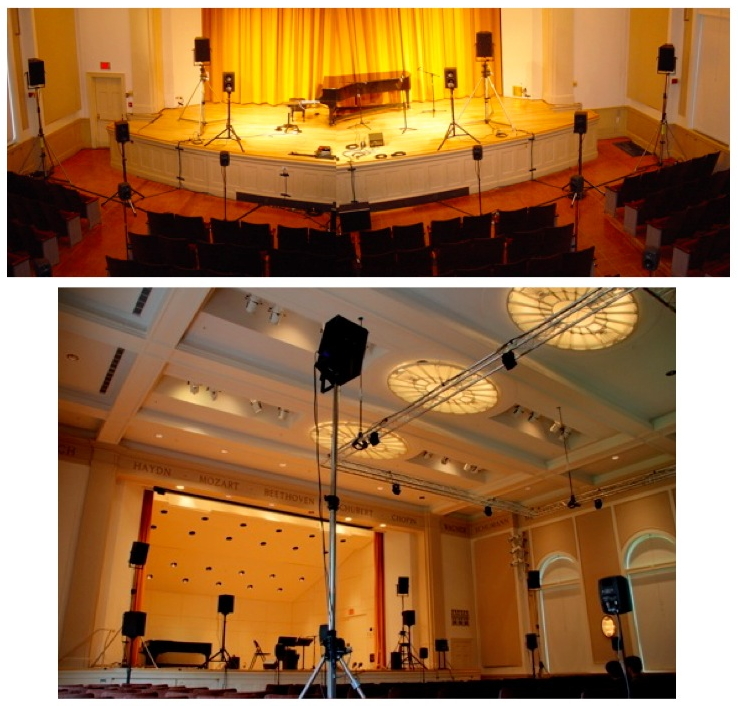

The gesture is also important in my spatial thinking, both in composition and in the interpretation of works on loudspeaker systems. Here are some pictures of Harvard’s diffusion system ‘Hydra’ with 40 speakers.

Das Bleierne Klavier / Zellen-Linien

I’ll speak now of a piece for piano and electronics from 1999. The idea was to create a prepared piano without physical preparations in the instrument and the possibility that the preparations change, sometimes quickly.

For ‘Das Bleierne Klavier’, there was no written score, only verbal descriptions of the relationship between instrument and electronics in each of the 32 sections.

That gave me a lot of freedom to explore new ideas during each concert, and over time they converged to a model. Each new interpretation followed the model’s general lines, but with local explorations. Several pianists asked me to be able to play the work. I rehearsed with a few, but I did not get exactly what I wanted, as their knowledge of the interaction between the two media was naturally limited and could not inform their improvisations.

Therefore, in 2007 I wrote a version that was very close to my ideal model.

But, once the material existed on paper, I saw temporal relationships in a different dimension. While improvising, I had a different view of the proportions. I began therefore to reconstruct some aspects and the written version ‘Zellen-Linien’ emerged.

Let’s look at some techniques to capture the gesture of the performer. The first phrase that I experimented with is based on the ‘F5’. I wanted to add to each ‘F5’ on the piano a different recording of a prepared-piano ‘F5’. There is also a ‘B5’ in this musical line, which should not be doubled by a pre-recorded sound. The computer thus needed to distinguish between the two pitches.

I spent a lot of time searching for a pitch tracking solution, but without success. The sound of a piano note is very noisy during the attack. I could have delayed the analysis by 30 ms to concentrate more on the harmonic portion of the note, but in that case the decision about its pitch, and subsequently the playback of the prepared sound, would also have been delayed.

So I found myself in this loop where I was no longer questioning the idea.

Once I realized this, I switched to another approach: musicians have an incredibly fine ability to control the dynamics of their playing. I could simply write the phrase so that the pianist plays the ‘F’ notes with a dynamic of ‘mf’ and the ‘B’ with a dynamic of ‘piano’. I therefore developed an envelope follower.

As soon as the signal exceeds a threshold, the follower detects it. I can connect, among other possibilities, the playback of a sound file to this action. In the case of this phrase I actually connect it to a collection of sounds: each new moment of attack will trigger a different prepared sound.

The envelope follower has a second parameter: the time that the signal must remain below the threshold before the next attack is considered. This helps to avoid double attacks, but can also serve as a compositional parameter. In a very dense texture, for example, only the notes that were preceded by a moment of silence are taken.
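The two parameters just described, a threshold and a time the signal must stay below it, can be sketched in a few lines. This is a minimal illustration and not the patch used in the piece: the simple peak follower, the `decay` value, the function names and the sound file names are all assumptions made for the example.

```python
from itertools import cycle
from typing import Iterable, List, Sequence, Tuple


def detect_attacks(samples: Iterable[float], threshold: float,
                   rearm_time: float, sample_rate: int,
                   decay: float = 0.5) -> List[int]:
    """Return the sample indices at which an attack is detected.

    threshold  : envelope level that counts as an attack
    rearm_time : seconds the envelope must stay below the threshold
                 before the next attack is considered
    decay      : per-sample envelope decay (assumed, illustrative value)
    """
    rearm_samples = round(rearm_time * sample_rate)
    envelope = 0.0
    quiet_count = 0
    armed = True  # the very first attack is always eligible
    attacks: List[int] = []
    for index, sample in enumerate(samples):
        # Peak follower: jump up to a new peak, otherwise decay.
        envelope = max(abs(sample), envelope * decay)
        if envelope >= threshold:
            if armed:
                attacks.append(index)
                armed = False
            quiet_count = 0  # any loud sample restarts the waiting time
        else:
            quiet_count += 1
            if quiet_count >= rearm_samples:
                armed = True
    return attacks


def assign_sounds(attacks: Sequence[int],
                  sound_files: Sequence[str]) -> List[Tuple[int, str]]:
    """Pair each attack with the next sound from a collection, in rotation."""
    pool = cycle(sound_files)
    return [(index, next(pool)) for index in attacks]


if __name__ == "__main__":
    # Toy signal at an assumed 1 kHz rate: loud note, loud note after a
    # short gap, soft note after a long gap, then another loud note.
    signal = ([0.0] * 10 + [0.8] * 5 + [0.0] * 20 + [0.8] * 5
              + [0.0] * 100 + [0.1] * 5 + [0.0] * 10 + [0.8] * 5)
    hits = detect_attacks(signal, threshold=0.3, rearm_time=0.05,
                          sample_rate=1000)
    # Hypothetical file names standing in for the prepared sounds.
    print(assign_sounds(hits, ["prepared_a.wav", "prepared_b.wav"]))
```

In the toy signal, the second loud note arrives before the waiting time has elapsed and is ignored, and the soft note never crosses the threshold, which mirrors the idea of separating notes by their written dynamics rather than by their pitch.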

I will now play you the sound example of this phrase.

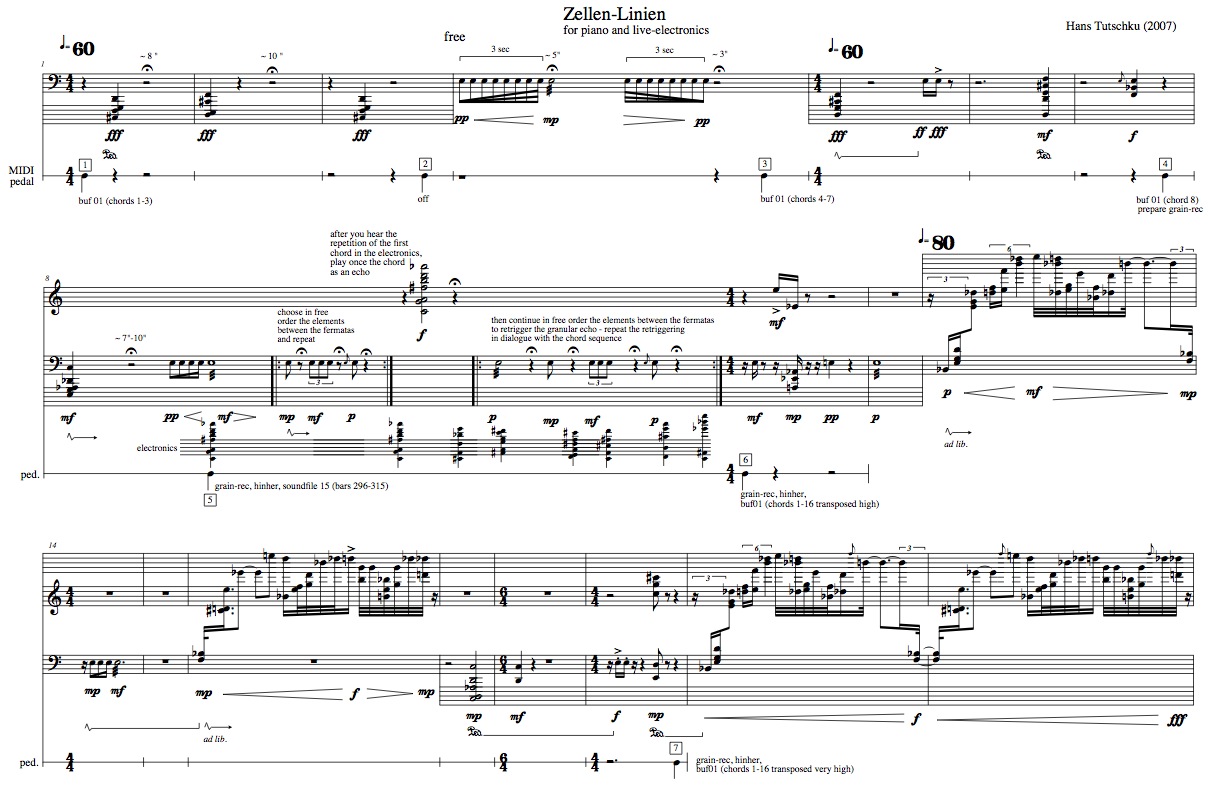

This is the beginning of the score. The work starts with a few chords in the bass that trigger sound files through the same envelope follower. It gives the impression of real-time processing, but the sounds are prepared files, synthesized with the Diphone software.

In the second system, you see a chord progression in the electronics. After the second repetition of the first chord, the pianist will play the same chord, like an echo of the electronics. This is something that interests me a lot.

If we look at compositions for instruments and tape from the 1950s, 60s and 70s, we see that they often have complex temporal relationships between the instrument and the electronics. Once real-time processing became accessible, we often observe a sort of temporal impasse, where the electronics only happen at the same moment as the instrument – e.g. for transposition – or later. I therefore tried a combination of pre-recorded sounds and real-time processing to obtain a multidimensional time network, where the electronics can also introduce musical material.

I will play you the beginning of the composition in a recording with Sebastian Berweck:

At the end of the piece, it is the pianist who plays the sequence of chords. I use the amplitude follower to trigger a ‘freeze’ mechanism, which records a short moment of the chord’s spectrum and sustains it thereafter. This sound is doubled with a granular synthesis, which is also controlled by the intensity of the played chords.